Ever since Apple released its breakthrough camera system in the iPhone 7 Plus, portrait mode has kept evolving. The 12-megapixel camera includes optical image stabilization on both iPhone 7 and iPhone 7 Plus. A larger ƒ/1.8 aperture and 6-element lens enable brighter, more detailed photos and videos, and wide color capture allows for more vibrant colors. Machine learning separates the foreground from the background to produce portraits that were once possible only with DSLR cameras.

Since the release of this phone, most flagship phones have adopted dual cameras, and some companies, Apple included, have taken the idea to a whole new level. Portrait mode kept getting better, and today's smartphones can shoot photos with quality approaching that of a professional camera. Take a look at the great video presented by Marques Brownlee.

How good has it gotten?

In fact, smartphones like the Google Pixel 2, iPhone X and Galaxy Note 8 have high-quality cameras that come really close to professional ones. The camera they are compared to is the Hasselblad X1D, a device you could find in a professional photographer’s kit. It is highly regarded for the quality of its pictures, its shallow depth-of-field effect and its medium-format sensor. In digital photography, medium format refers either to cameras adapted from medium-format film models or to cameras that use sensors larger than a 35mm film frame.

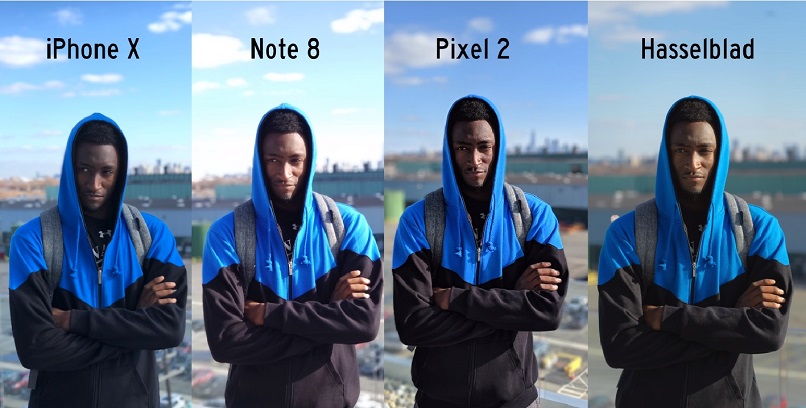

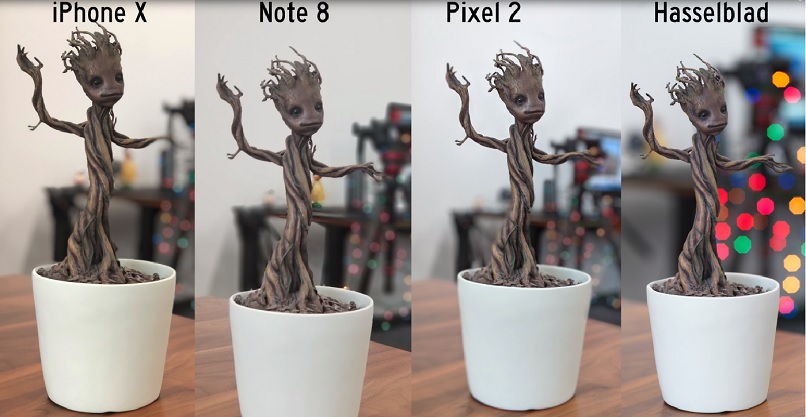

In the video, Marques Brownlee compares the cameras of the Google Pixel 2, Samsung Galaxy Note 8, Apple iPhone X and the professional Hasselblad X1D. All of the photos look great, but there are differences in shot quality and in how each portrait mode works. Here’s a comparison, so you can get an idea of what I’m talking about.

Edge Detection

What differs most among these four photos is the edge detection. Each camera finds the edges of the subject differently, then sharpens the areas inside those edges to make them clearer. The professional Hasselblad doesn’t need that technology because it has a shallow plane of focus: anything outside the plane of focus is blurred optically, and anything that is neither too close to nor too far from it stays sharp. Smartphones had to take a different approach, because their lenses are much smaller and nearly everything falls within the plane of focus, so the edges have to be defined in software.
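One way to see why a tiny phone lens keeps nearly everything sharp is the hyperfocal distance, H = f² / (N · c) + f: focus there, and everything from H/2 to infinity looks acceptably sharp. The sketch below is only an illustration. The focal lengths, apertures and circle-of-confusion values are rough assumptions for a phone-sized and a medium-format-sized camera, not measured specs of any device mentioned here.

```python
def hyperfocal_mm(focal_length_mm, f_number, coc_mm):
    """Hyperfocal distance H = f^2 / (N * c) + f, all lengths in millimetres."""
    return focal_length_mm ** 2 / (f_number * coc_mm) + focal_length_mm


# Assumed, illustrative values (not real device specs):
# a ~4 mm phone lens at f/1.8 and a ~90 mm medium-format lens at f/3.2.
phone_h = hyperfocal_mm(4.0, 1.8, 0.004)
medium_format_h = hyperfocal_mm(90.0, 3.2, 0.04)

print(f"phone:         sharp from about {phone_h / 2 / 1000:.1f} m to infinity")
print(f"medium format: sharp from about {medium_format_h / 2 / 1000:.1f} m to infinity")
```

With these assumed numbers the phone is sharp from roughly a meter onward, while the larger camera would need to focus tens of meters away to get the same effect, which is why it blurs backgrounds naturally at portrait distances.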

Different phones apply this technique in different ways. The iPhone X and Galaxy Note 8 have dual cameras and use the difference between the two views to sense distance and decide what to blur and what to keep sharp. The Google Pixel 2, on the other hand, blurs backgrounds using a single camera, machine learning and a dual-pixel sensor to help judge depth.
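The dual-camera idea can be sketched with the textbook stereo relation: an object shifts more between the two views the closer it is, so depth = focal length × baseline / disparity. This is a minimal sketch of that principle, not any phone maker's actual pipeline. The function names, the 3000-pixel focal length, the 1 cm lens spacing and the 0.3 m tolerance are all hypothetical.

```python
def depth_from_disparity(focal_length_px, baseline_m, disparity_px):
    """Pinhole stereo model: depth = focal length * baseline / disparity."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


def should_blur(depth_m, subject_depth_m, tolerance_m=0.3):
    """Blur a pixel when it lies farther than tolerance_m from the subject."""
    return abs(depth_m - subject_depth_m) > tolerance_m


# Hypothetical numbers: 3000 px focal length, lenses 1 cm apart.
subject = depth_from_disparity(3000, 0.01, 30)     # large shift between views: near
background = depth_from_disparity(3000, 0.01, 10)  # small shift between views: far

print(round(subject, 2), should_blur(subject, subject))        # subject stays sharp
print(round(background, 2), should_blur(background, subject))  # background gets blurred
```

Because the two lenses on a phone sit so close together, the shifts are tiny, which is one reason edges such as hair are hard to get right.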

In portrait mode, the iPhone tends to focus on the details of the face and slightly blur everything else, which sometimes doesn’t look great. The Note 8 does a pretty good job with edge detection, but neither of those phones can match the quality of the Google Pixel 2.

Blur Variation

Another big difference between these high-end models is the blur variation. To get the best shot possible, you have to pay attention to how the subject is presented: it should be emphasized by keeping it sharp, while the background is smoothly blurred. It sounds simple, but it’s a bit more complex than you’d expect. With professional cameras, the objects closest to the plane of focus stay sharp and those farthest from it are blurred the most. The background right behind the subject is only slightly blurred, and as distance increases, so does the blur.
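The way optical blur grows with distance can be approximated with the thin-lens circle-of-confusion formula. The sketch below is a rough model under assumed values (a 90 mm lens at f/3.2 focused at 2 m, chosen for illustration only), and the function name is hypothetical.

```python
def blur_circle_mm(focal_length_mm, f_number, focus_dist_mm, object_dist_mm):
    """Thin-lens blur-circle diameter on the sensor for an out-of-focus object."""
    aperture_mm = focal_length_mm / f_number
    magnification = focal_length_mm / (focus_dist_mm - focal_length_mm)
    defocus = abs(object_dist_mm - focus_dist_mm) / object_dist_mm
    return aperture_mm * magnification * defocus


# Assumed setup: subject in focus at 2 m, background objects farther away.
distances_mm = [2000, 3000, 5000, 10000]
blurs = [blur_circle_mm(90.0, 3.2, 2000, d) for d in distances_mm]

for d, b in zip(distances_mm, blurs):
    print(f"{d / 1000:>4.0f} m -> blur circle {b:.2f} mm")
```

The subject at the focus distance gets a blur of zero, and each step farther back gets a larger blur circle. A phone's portrait mode has to imitate this gradual falloff in software instead of applying one uniform blur.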

The good thing about smartphone cameras is that some of them give you a preview before you take the shot. This can be crucial, because in photography you can never catch the same moment twice. With a preview, you can quickly adjust the camera and fix whatever needs fixing.

Portrait mode is developing quickly, and in the last few years we have witnessed a big leap in the way smartphone cameras work. The iPhone 8 even figures out whether you are within range for portrait mode and lets you know where to position yourself; if you don’t, it takes a regular selfie without portrait mode.

Since the technology is mostly built around human subjects and works best with them, you may wonder how it handles non-human ones, like pets. Smartphone cameras actually work pretty well with pets, but if you take a close-up photo of other items with lots going on in the background, the result may look a little strange. Portrait mode still needs small adjustments, which I am sure we’ll see soon. Thanks to the quick development of phone cameras, we can now carry professional-grade cameras in our pockets and capture important moments anywhere, anytime.